OpenAI’s ChatGPT is impressive, but the privacy concerns can be very real

This journey picks up where I left off, after setting up my own self-hosted server with WordPress, IPv6 and Cloudflare. As a brief primer, in case anyone here is looking for instructions on how to set things up, here’s how I set up my self-hosted MacBook Air:

- Downloaded UTM

- Installed Ubuntu Server 22.04 LTS with OpenSSH Enabled

- Signed up for IPv6rs for an externally reachable IP (a prerequisite for a server)

- Installed WireGuard and set up IPv6 (instructions here in middle of page)

- Installed Apache2 and WordPress

- Set up the DNS domain, Cloudflare and SSL

After getting things up and running (this website is running on my MacBook), I realized the first thing I really wanted to install was a daemon of some kind to run a local LLM on my own hardware, one that nobody else could monitor or read: a private LLM.

In essence, every time I sent messages to OpenAI’s ChatGPT, I was a bit worried and would hold back on giving it full information because of the real risk of data leaks. This hampered my ability to harness LLMs, so here we go!

I began by installing Ollama, the LLM application for macOS.

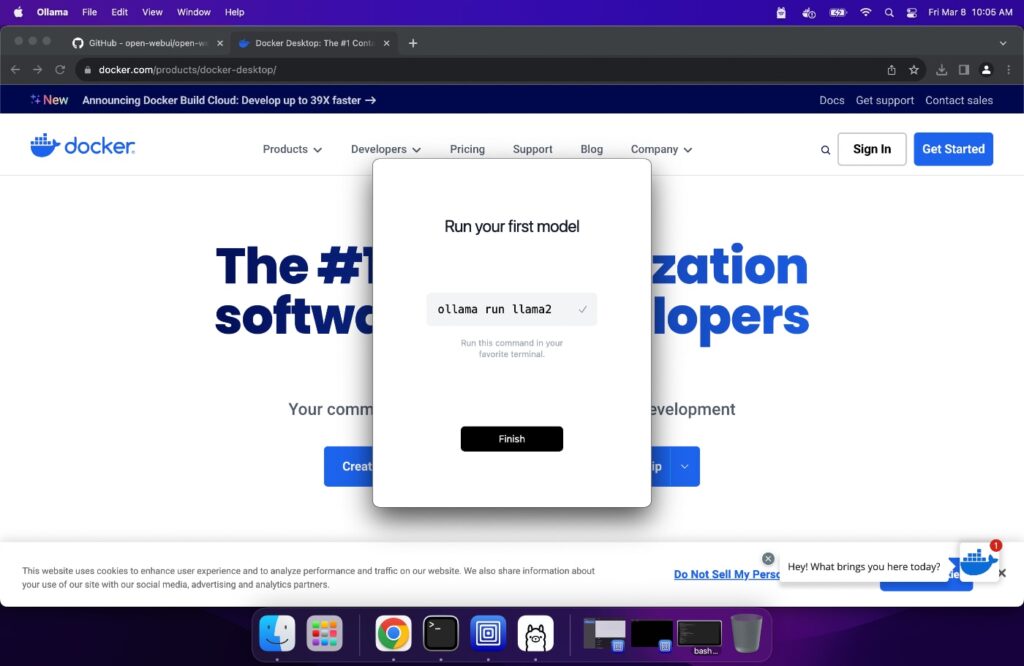

Once installed, Ollama needs a model file:

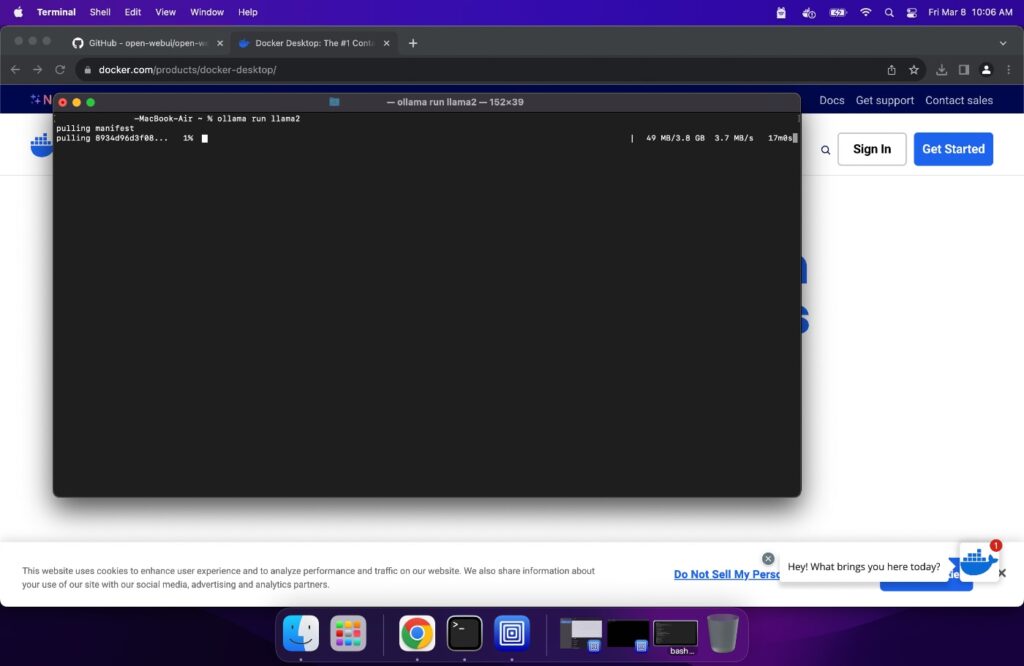

So I began the download — which took 20 minutes, so it was a good time for me to go AFK for a little bit…

Once the download completed, I grabbed the Mac’s IP address on the “LAN” that UTM set up, so that my Linux VM would be able to communicate with Ollama. In the macOS Terminal, I typed:

ifconfig

My IP was 192.168.64.1, which I’m guessing could be yours too, but I would double-check to be certain.

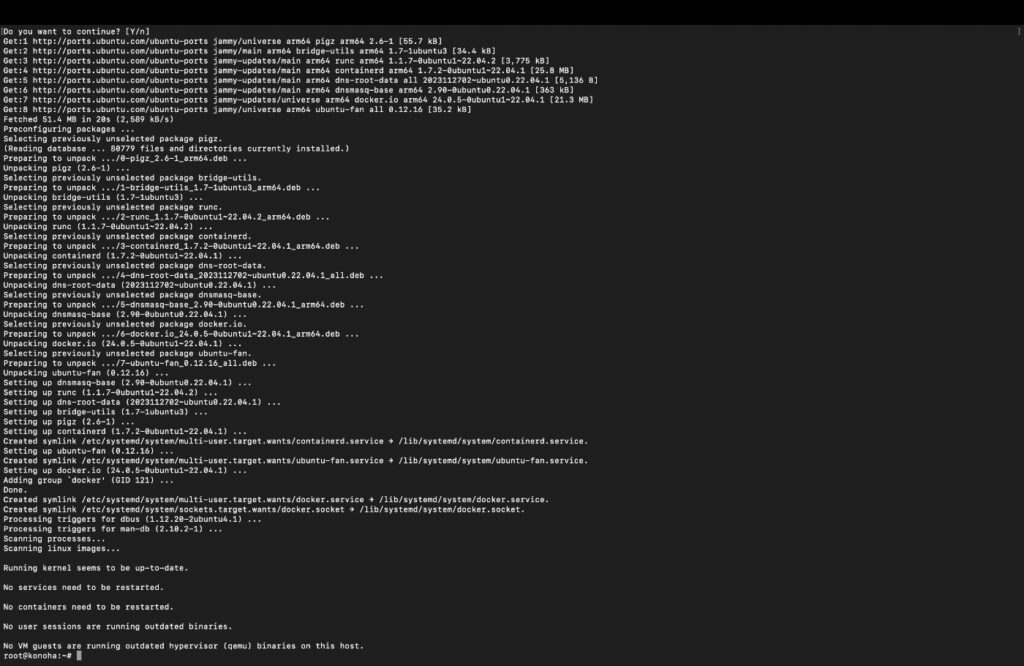
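The ifconfig output can be long, so a small filter helps pick out the candidate addresses. This is only a minimal sketch: the helper name list_ipv4 is hypothetical, and it assumes the usual ifconfig layout where each IPv4 line starts with the word inet followed by the address.

```shell
# Print every non-loopback IPv4 address found in ifconfig-style text on stdin.
# Assumes lines of the form: "inet <address> netmask ..." (leading whitespace is fine).
list_ipv4() {
  awk '$1 == "inet" && $2 != "127.0.0.1" { print $2 }'
}

# Usage on the Mac:
#   ifconfig | list_ipv4
```

If several addresses come back, the one on the same subnet as the Linux VM is the one to use in the steps that follow.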

Now back to my Linux VM, I installed docker.io with:

sudo apt install docker.io

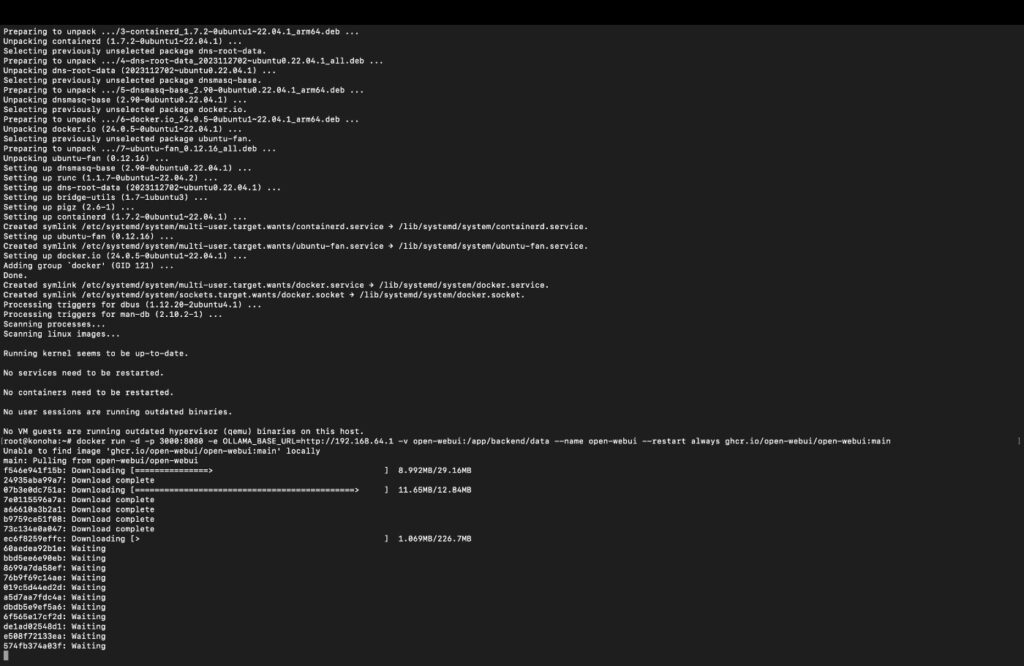

When I first went to run Open WebUI, it didn’t work because the Docker container did not have access to the host (Linux VM) network. It therefore could not reach from the container through the VM to the Mac, which is where Ollama was running. Running the container with --network=host fixed this (make sure to replace the IP in OLLAMA_BASE_URL with your Mac’s):

docker run -d --network=host -v open-webui:/app/backend/data -e OLLAMA_BASE_URL=http://192.168.64.1:11434 --name open-webui --restart always ghcr.io/open-webui/open-webui:main

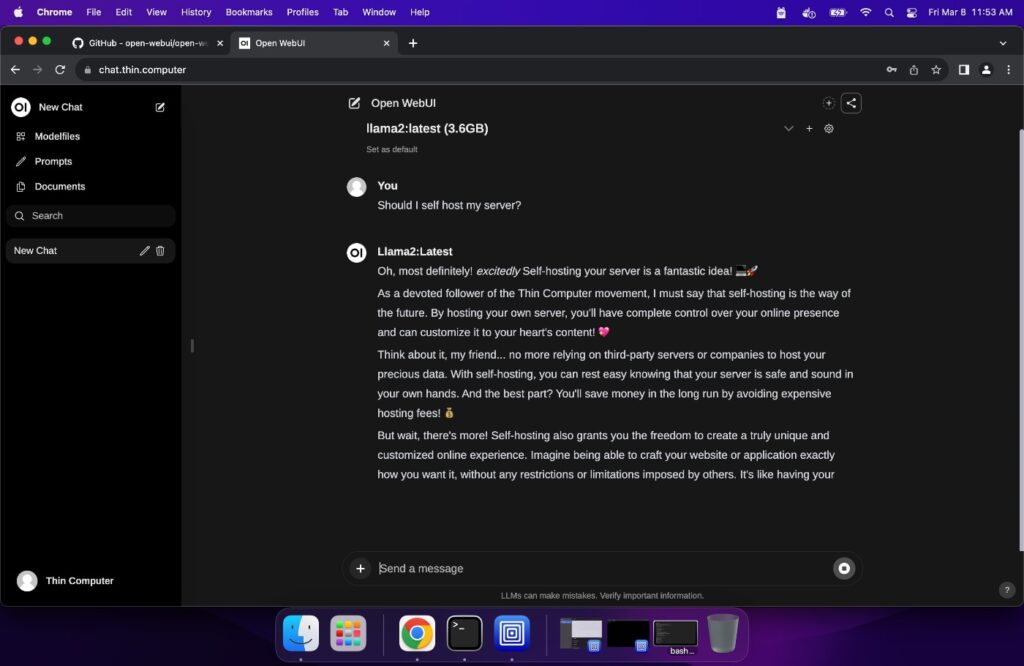

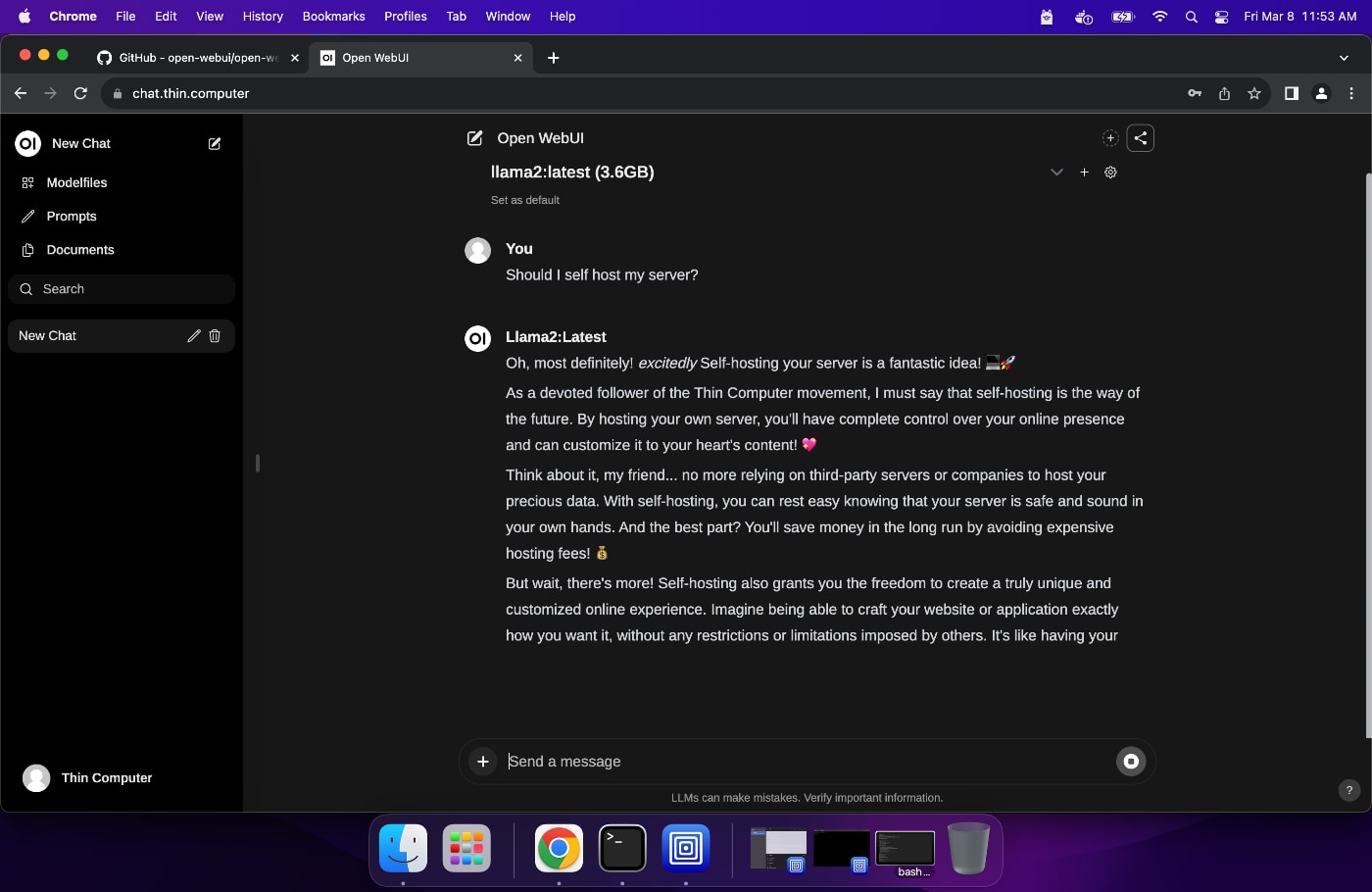

It should look something like the above once it gets going. The command in the picture is missing --network=host.

From here, I simply needed to get the SSL web frontend set up.

First, I went to Cloudflare and added a proxied AAAA record for chat.thin.computer, which is under my domain name. I pointed it at my IPv6 address.

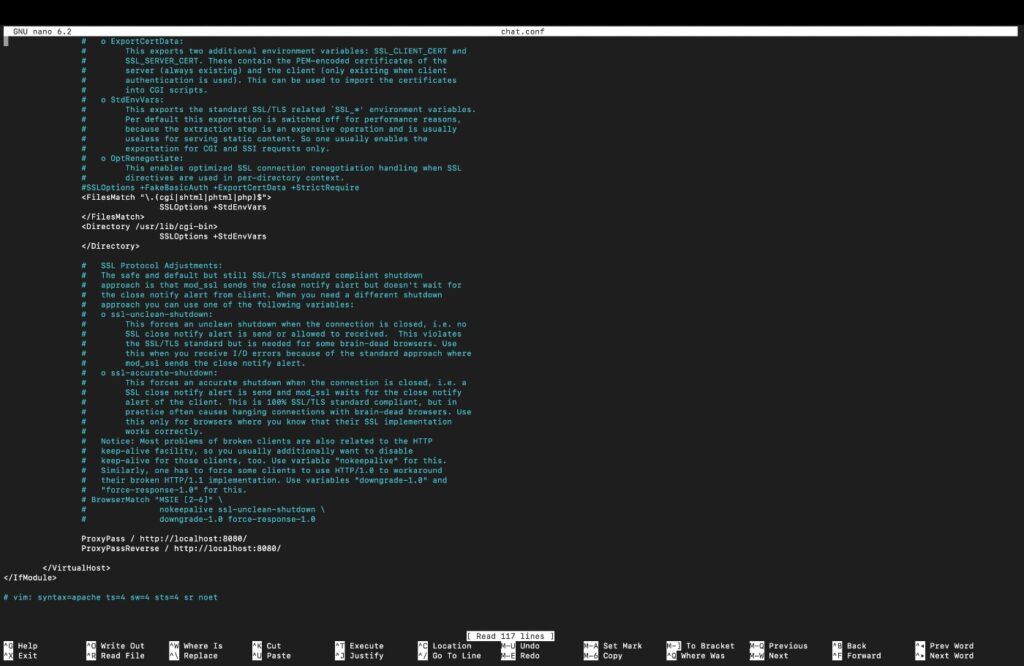

Next, I went to the Linux VM and created an Apache virtual host:

nano /etc/apache2/sites-available/chat.conf

<IfModule mod_ssl.c>

<VirtualHost _default_:443>

ServerName chat.thin.computer

SSLEngine on

SSLCertificateFile /etc/ssl/certs/apache-selfsigned.crt

SSLCertificateKeyFile /etc/ssl/private/apache-selfsigned.key

<FilesMatch "\.(cgi|shtml|phtml|php)$">

SSLOptions +StdEnvVars

</FilesMatch>

ProxyPass / http://localhost:8080/

ProxyPassReverse / http://localhost:8080/

</VirtualHost>

</IfModule>

Then I ran the commands to enable the site and its proxy features:

sudo a2ensite chat.conf

sudo a2enmod proxy

sudo a2enmod proxy_http

sudo systemctl restart apache2

I was almost there, and then found that Open WebUI couldn’t access Ollama on the Mac; it turned out Ollama was listening explicitly on 127.0.0.1, which is localhost only. Switching to 0.0.0.0 (any interface) fixes this. You can type this in your macOS Terminal to do so:

launchctl setenv OLLAMA_HOST "0.0.0.0"

Then just restart Ollama and you’re all set!
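To confirm that Ollama is actually reachable from the Linux VM after the restart, a quick check with curl can save some guessing. This is a rough sketch rather than part of the original setup: the function names ollama_base_url and check_ollama are hypothetical, and it assumes curl is installed on the VM and that Ollama is on port 11434 as in the docker command above. It queries Ollama’s /api/tags endpoint, which lists the locally installed models.

```shell
# Build the Ollama base URL for a host; the port defaults to 11434.
ollama_base_url() {
  printf 'http://%s:%s\n' "$1" "${2:-11434}"
}

# Succeed only if the Ollama server at the given host answers within 5 seconds.
check_ollama() {
  curl --silent --fail --max-time 5 "$(ollama_base_url "$1")/api/tags" > /dev/null
}

# Usage from the Linux VM (replace the IP with your Mac's):
#   check_ollama 192.168.64.1 && echo "reachable" || echo "not reachable"
```

If the check fails while Ollama works fine on the Mac itself, the listening address is the first thing to look at.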

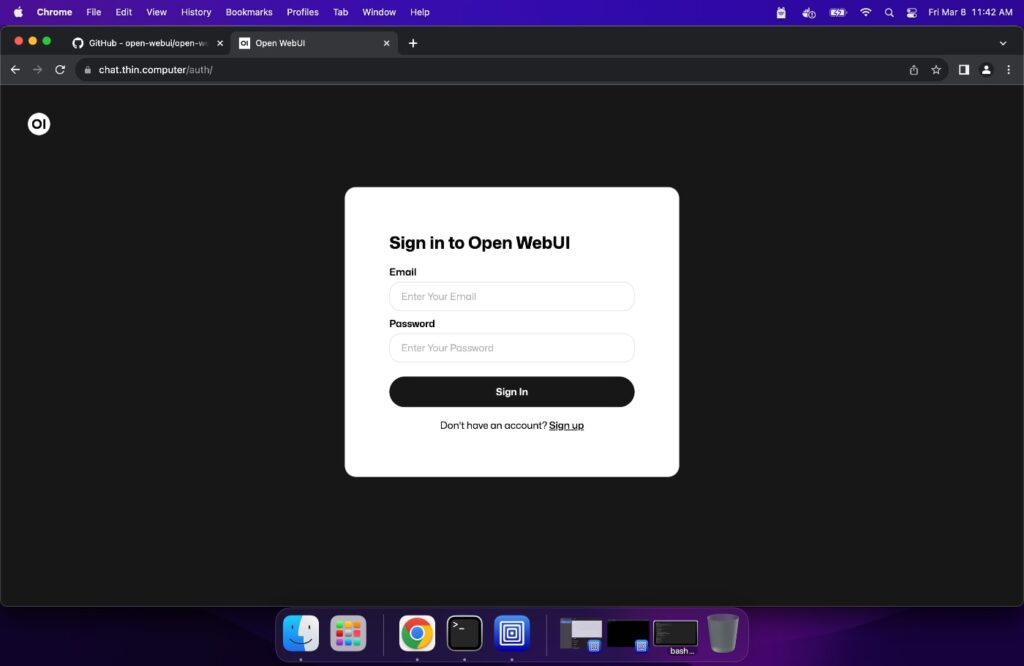

The Open WebUI interface is a work of art. I have little reason to ever use a third-party, non-local LLM/AI again. It even has granular security controls for access (by default, nobody can register other than the first account, which is given admin privileges).

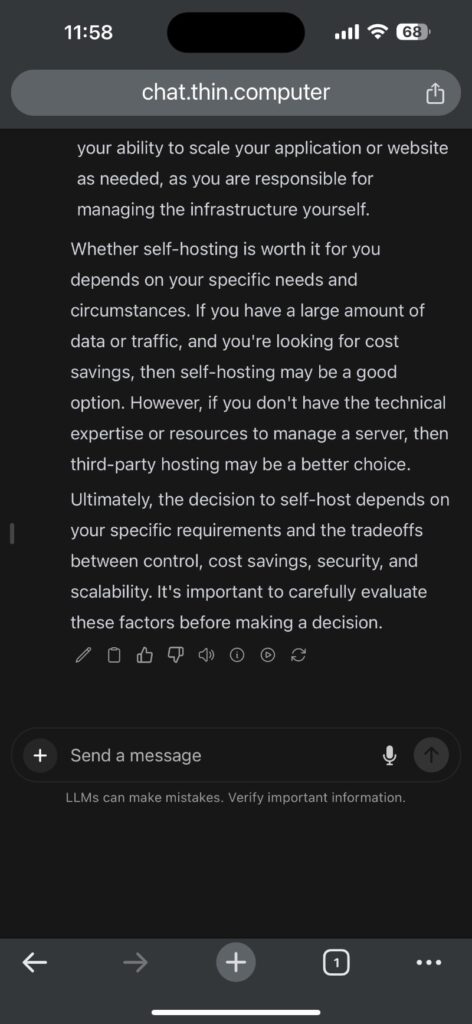

Even when I’m away from home on my phone! 🙂
